Optimizing AI Infrastructure: A Strategy for Physically Separating Models

- Джимшер Челидзе

- Aug 8, 2025

In recent years, many companies have realized that artificial intelligence is no longer an experimental technology but a real source of competitive advantage. At the same time, not everyone is ready to build their AI on cloud platforms, whether because of information security requirements, the risk of data leakage, the high cost of cloud resources, or the need for complete control over the infrastructure.

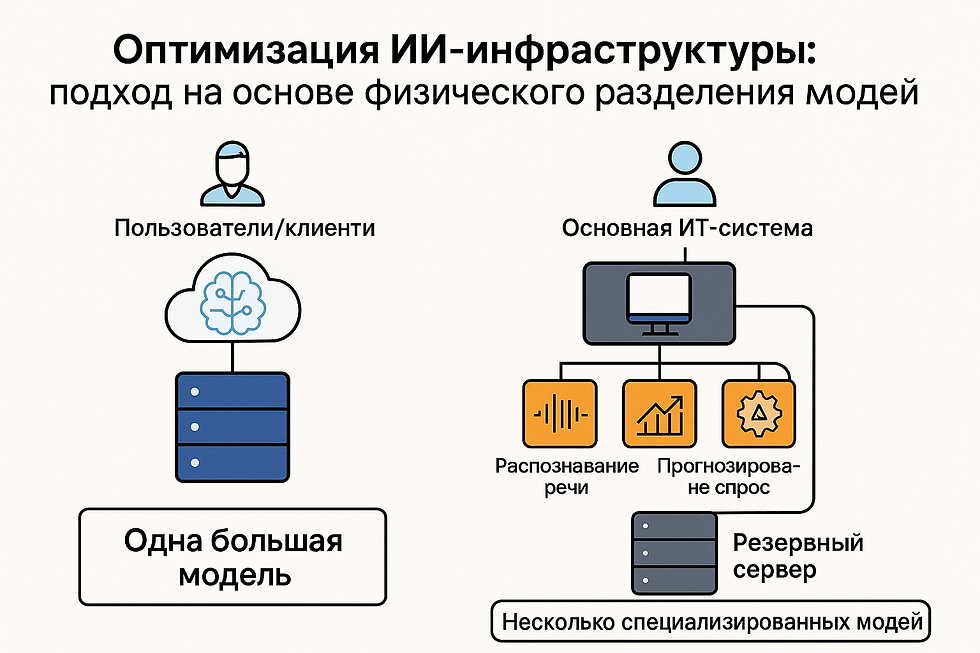

As a result, businesses increasingly choose local, autonomous AI solutions that run inside the corporate perimeter and work with internal databases and documents. This raises the key question: should the system follow the traditional scheme, with one powerful server hosting one universal large model, or a modular architecture in which several specialized models operate independently?

We advocate the second approach: a set of medium-sized AI models (20–30 billion parameters), each optimized for a specific application task. These models are deployed on dedicated server “islands” with hardware configured for the task. This approach combines scalability, task-specific fine-tuning, resilience to failures, transparent cost control, and a high level of security.

Architectural principle: specialized "islands" for each task

The strategy deploys AI solutions in the following order:

Business challenge identification: pick a specific operational or strategic challenge where AI delivers measurable value (customer sentiment analysis, predictive equipment maintenance, support automation, supply chain optimization).

Allocation of a server resource: a separate physical server, or a highly isolated virtual machine with guaranteed resources (CPU, GPU, RAM, storage), is allocated for the selected task and model.

Precise configuration for the purpose: the hardware is configured solely from the requirements of the model and its load profile.

Isolated execution: the model runs in its own environment without affecting other AI components, while exposing the interfaces needed for integration.
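The four steps above can be captured as a small inventory of islands. The following is a minimal sketch, not a prescribed implementation: the `IslandSpec` class, the model names, the host names, and the hardware figures are all hypothetical and only illustrate the idea that every task gets its own model and its own server.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class IslandSpec:
    """One island: a business task, its model, and its dedicated hardware.

    All field values used below are hypothetical examples.
    """
    task: str               # business challenge the island serves
    model_name: str         # illustrative model identifier
    params_billions: float  # model size, in billions of parameters
    host: str               # dedicated server or isolated VM
    gpus: int
    ram_gb: int


def shared_hosts(islands):
    """Return hosts assigned to more than one island.

    A non-empty result means two models would compete for the same
    machine, which breaks the physical-separation principle.
    """
    counts = Counter(island.host for island in islands)
    return sorted(host for host, n in counts.items() if n > 1)


islands = [
    IslandSpec("customer sentiment analysis", "sentiment-24b", 24, "srv-01", 2, 256),
    IslandSpec("predictive equipment maintenance", "maintenance-30b", 30, "srv-02", 4, 512),
    IslandSpec("support automation", "support-20b", 20, "srv-03", 2, 256),
]

if __name__ == "__main__":
    conflicts = shared_hosts(islands)
    print("Shared hosts:", conflicts if conflicts else "none")
```

Keeping the inventory as plain data makes it easy to check the separation rule automatically before a new model is rolled out.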

Architecture diagram

Key Benefits

Increased efficiency and simplified scaling

Optimization of resources through precise selection of configurations for each task.

No competition for computing power between models.

Scaling only the required “island” as the load increases.

Isolated update and replacement of models without risk to other systems.

Transparency of the financial model and ROI

Clear attribution of equipment and support costs to a specific task.

Measuring performance in relation to business metrics.

Possibility of accurate calculation of return on investment.

ROI calculation example

Savings from predicting equipment failures: 5,000,000 ₽/year.

Annual server maintenance: 200,000 ₽.

Integration and development: 3,000,000 ₽ (one-time).

Step 1. Annual benefit = 5,000,000 − 200,000 = 4,800,000 ₽.

Step 2. First-year ROI = (4,800,000 − 3,000,000) / 3,000,000 × 100% = (1,800,000 / 3,000,000) × 100% = 60%.

Conclusion: the project pays for itself within the first year, and each subsequent year yields net savings of about 4.8 million ₽.
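The same arithmetic can be reproduced for any island with a few lines of Python. This is a sketch under simplifying assumptions: the function names are illustrative, the payback figure is a simple undiscounted estimate, and savings are assumed to accrue evenly over the year.

```python
def net_annual_benefit(annual_savings, annual_maintenance):
    """Yearly savings minus yearly running costs."""
    return annual_savings - annual_maintenance


def first_year_roi_percent(annual_savings, annual_maintenance, one_time_cost):
    """First-year ROI: (net annual benefit - one-time cost) / one-time cost, in %."""
    net = net_annual_benefit(annual_savings, annual_maintenance)
    return (net - one_time_cost) * 100 / one_time_cost


def payback_months(annual_savings, annual_maintenance, one_time_cost):
    """Months needed for the net benefit to cover the one-time cost.

    Assumes the benefit accrues evenly month by month (no discounting).
    """
    net = net_annual_benefit(annual_savings, annual_maintenance)
    return one_time_cost / (net / 12)


if __name__ == "__main__":
    # Figures from the example above, in rubles.
    savings, maintenance, integration = 5_000_000, 200_000, 3_000_000
    print(net_annual_benefit(savings, maintenance))                    # 4800000
    print(first_year_roi_percent(savings, maintenance, integration))   # 60.0
    print(payback_months(savings, maintenance, integration))           # 7.5
```

Under these assumptions the one-time cost is recovered in about seven and a half months, which is consistent with the project paying for itself in the first year.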

Strengthening information security

Isolation of data and computational processes between tasks.

Fine-grained access control based on the principle of least privilege.

Limited blast radius: a breach of one server does not compromise the entire system.

Keeping data processing local makes it easier to comply with GDPR, CCPA, Russia's Federal Law No. 152-FZ on personal data, and other regulations.
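Least-privilege access between islands can be expressed as a deny-by-default allow-list. The sketch below is only an illustration: the island names, the caller names, and the `is_allowed` helper are hypothetical, and a real deployment would enforce the same rule in the network layer or an identity system rather than in application code.

```python
# Hypothetical policy: each island lists the only services allowed to call it.
ACCESS_POLICY = {
    "sentiment-island": {"crm-service"},
    "maintenance-island": {"scada-gateway", "maintenance-dashboard"},
}


def is_allowed(caller, island, policy=ACCESS_POLICY):
    """Deny by default: a caller reaches an island only if explicitly listed."""
    return caller in policy.get(island, set())


if __name__ == "__main__":
    print(is_allowed("crm-service", "sentiment-island"))    # True
    print(is_allowed("crm-service", "maintenance-island"))  # False
    print(is_allowed("crm-service", "unknown-island"))      # False
```

Because each island has its own list, a compromised caller gains access only to the islands it was explicitly granted.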

Improving fault tolerance

Localization of incidents: failure of one “island” affects only its function.

According to statistics, about 3% of servers fail monthly; with a modular architecture, this does not paralyze the entire platform.

Fast recovery using a backup server (N+1 strategy).
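To see what the roughly 3% monthly failure rate quoted above implies, it helps to compare the chance that some island fails with the chance that every island fails at once. This is a back-of-the-envelope sketch that assumes servers fail independently and with the same probability, which real hardware only approximates; the function names are illustrative.

```python
def p_any_failure(n_servers, p_fail=0.03):
    """Probability that at least one of n independent servers fails in a month."""
    return 1 - (1 - p_fail) ** n_servers


def p_all_fail(n_servers, p_fail=0.03):
    """Probability that all n independent servers fail in the same month."""
    return p_fail ** n_servers


def expected_failures(n_servers, p_fail=0.03):
    """Expected number of failed servers per month."""
    return n_servers * p_fail


if __name__ == "__main__":
    for n in (1, 5):
        print(
            f"{n} server(s): any failure {p_any_failure(n):.3f}, "
            f"all fail {p_all_fail(n):.2e}, "
            f"expected failures {expected_failures(n):.2f}"
        )
```

With a single server, every failure (3% per month) stops the whole platform. With five islands, some failure becomes more likely (about 14% per month), but each incident affects one function only, and a simultaneous failure of all five is vanishingly unlikely under the independence assumption. This is why the N+1 spare server matters: failures are expected, and recovery has to be routine.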

Managed Complexities

The need for mature DevOps practices and orchestration tools (e.g. Kubernetes).

The risk of underutilized resources under variable load, which can be mitigated with virtualization and containerization.

Higher initial investment but lower total cost of ownership (TCO) in the long run.

Potential network latency when models exchange data frequently, which calls for a fast, low-latency internal network.

Optimal application scenarios

Critical business processes.

Processing sensitive data.

Models with heterogeneous resource requirements.

Projects with strict requirements for cost transparency and ROI.

Integration with legacy systems where deploying a single unified AI platform is difficult.

Alternative approaches

Cloud GPU/TPU services: flexible and fast to deploy, but they raise security concerns and create vendor dependence.

Kubernetes clusters with AI/ML support: a compromise between isolation and efficient resource use that requires a high level of expertise.

Conclusion

Physically separating AI models onto dedicated servers is not just a technical solution but a strategic choice. It provides manageability, security, fault tolerance, and cost transparency. For organizations where reliability, data protection, and the economic feasibility of AI initiatives are critical, this architecture is a strong fit.